Fair and unbiased recruiting is essential for creating diverse and inclusive workplaces, yet a study covered by The New York Times reveals significant employment discrimination. In an extensive audit, researchers sent 80,000 fake résumés to major U.S. companies and found that presumed white applicants got callbacks 9.5% more often than presumed Black applicants.[1] The results highlight the persistent biases that still exist in hiring today.

Addressing these biases is crucial to ensure equal opportunities for all candidates. AI can help by standardizing evaluations and focusing on qualifications, creating a fairer hiring process.

In this article, we explore effective strategies to reduce bias in recruiting and show how AI can help create equal opportunities for all candidates.

Importance of Reducing Bias in Recruiting

Bias in recruiting occurs when favoritism or prejudice unfairly influences hiring decisions, often based on factors unrelated to a candidate’s qualifications. Reducing bias is key because it boosts diversity, inclusion, and hiring effectiveness.

In the 2023 Deloitte Global Human Capital Trends survey, over 80% of organizations identified reducing recruitment bias, along with purpose, diversity, equity, and inclusion (DEI), sustainability, and trust, as key focus areas.

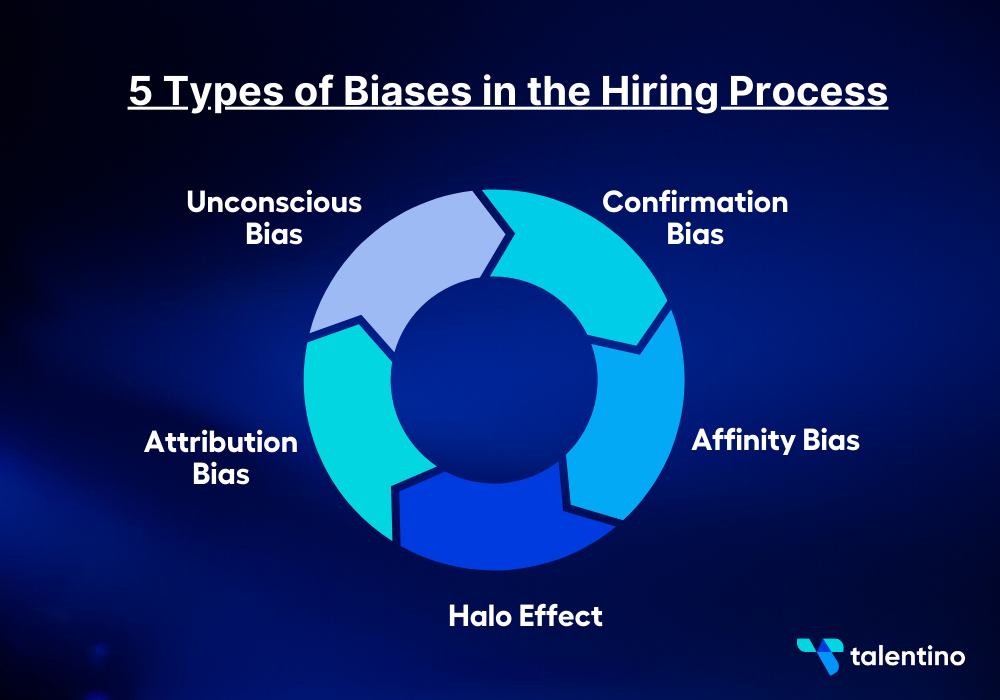

5 Types of Biases in the Hiring Process

Bias can show up in many ways during recruitment, affecting decisions from résumé screening to final interviews. Below are five of the most common types of bias encountered in hiring.

1. Unconscious Bias

Unconscious bias occurs when hiring managers rely on stereotypes without realizing it, which can cause significant disparities in how candidates are evaluated. Studies show that résumés with “white-sounding” names get more callbacks than those with “African-American-sounding” names, highlighting the widespread impact of bias in recruitment.

2. Confirmation Bias

Confirmation bias occurs when a recruiter favors information that supports their existing beliefs and ignores information that contradicts them. For example, a recruiter might focus only on qualifications that fit their idea of a “good candidate” and miss better-suited candidates. This bias narrows the evaluation process and can lead to poor hiring decisions.

3. Affinity Bias

Affinity bias occurs when recruiters prefer candidates with similar backgrounds, interests, or traits, which can result in a team that lacks diversity. For example, a hiring manager might prefer candidates from the same university or with similar hobbies and overlook highly qualified people from different backgrounds.

4. Halo Effect

The halo effect is a bias in which a positive impression of one trait shapes judgments about a candidate’s other skills and qualifications. For example, if a candidate impresses an interviewer with their personality, the interviewer might unconsciously rate their technical skills more favorably, even without solid evidence to support that rating. This bias can lead to hiring decisions based on superficial traits rather than true competencies.

5. Attribution Bias

Attribution bias involves the tendency to credit a candidate’s successes to external factors while blaming their failures on personal shortcomings.

For example, if a candidate performs well in an interview, an interviewer might attribute this to luck rather than skill, while treating a poor performance as a reflection of the candidate’s capabilities. This bias can lead to unfair assessments and missed opportunities for deserving candidates.

7 Strategies for Reducing Bias in Recruiting with AI

| Strategy for Reducing Bias with AI | Description | Stat/Example |
| --- | --- | --- |
| Blind Recruitment Tools | Anonymize candidate details | Increased women’s chances of being hired by 25–46% (Harvard/Princeton study) |
| Algorithmic Decision-Making | Assess skills and experience only | Talent acquisition and onboarding account for about 20% of AI’s value in HR (McKinsey study) |
| Structured Interview Assessments | Standardize interview questions and scoring | 81% more effective at identifying the best candidates (Research) |
| Bias Detection and Correction | Detect and adjust for biases | Reduces discouraged applicants by 25% |
| Diverse Data Training | Train AI on diverse datasets | 30% more likely to make fair decisions (Accenture report) |
| Continuous Monitoring and Feedback | Ongoing bias monitoring and adjustments | 30% improvement in the diversity of hires (Research) |
| Inclusive AI Design | Develop AI with diverse teams | 25% more likely to produce fair outcomes (MIT Media Lab study) |

Let’s discuss seven strategies for reducing bias in recruiting with AI.

1. Blind Recruitment Tools

Blind recruitment tools that use AI can hide candidates’ details such as names, gender, and photos, so that reviewers consider only their qualifications. This reduces the chance of unconscious bias affecting hiring decisions.
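As a rough illustration of what such a tool does under the hood, here is a minimal Python sketch that drops identifying fields from a candidate record and masks the candidate’s name in free text. The record layout, the field names in `IDENTIFYING_FIELDS`, and the `anonymize` function are hypothetical assumptions, not the API of any particular product.

```python
import re

# Hypothetical list of identifying fields; a real tool would define its own
# (and might also mask addresses, ages, graduation years, and so on).
IDENTIFYING_FIELDS = {"name", "gender", "photo_url", "date_of_birth"}


def anonymize(candidate: dict) -> dict:
    """Return a copy of the record with identifying fields dropped and the
    candidate's name masked inside any free-text string fields."""
    name_parts = candidate.get("name", "").split()
    redacted = {}
    for key, value in candidate.items():
        if key in IDENTIFYING_FIELDS:
            continue  # never pass these fields on to reviewers
        if isinstance(value, str):
            # Replace each part of the name (e.g. first and last) as a whole word.
            for part in name_parts:
                value = re.sub(rf"\b{re.escape(part)}\b", "[CANDIDATE]", value,
                               flags=re.IGNORECASE)
        redacted[key] = value
    return redacted


record = {
    "name": "Jane Doe",
    "gender": "female",
    "photo_url": "https://example.com/jane.jpg",
    "skills": ["python", "sql"],
    "summary": "Jane Doe led a team of five analysts.",
}
print(anonymize(record))
```

Only the `skills` list and the masked `summary` reach the reviewer; masking names inside free text matters because résumés often repeat the name in cover letters or summaries.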

The idea of blind evaluation isn’t new. It dates back to blind auditions, which the Boston Symphony Orchestra first used in 1952 to bring more diversity to its mostly white, male ensemble. A study by researchers at Harvard and Princeton found that these auditions increased the chances of women being hired by 25–46%, showing how removing personal details can lead to fairer decisions.

2. Algorithmic Decision-Making

AI algorithms can be set up to assess candidates on their skills and experience without considering demographic traits. This helps create a level playing field, because the evaluation focuses on the data that matters most for the job.
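A minimal Python sketch of skills-based scoring follows. It reads only job-relevant fields (listed skills and years of experience) and ignores everything else in the record, so two candidates who differ only in gender receive the same score. The job profile, the skill weights, and the 80/20 split between skills and experience are hypothetical assumptions, not any vendor’s actual model.

```python
# Hypothetical job profile: required skills with importance weights.
JOB_SKILLS = {"python": 3.0, "sql": 2.0, "communication": 1.0}
MIN_YEARS = 2


def skills_score(candidate: dict,
                 job_skills: dict[str, float] = JOB_SKILLS,
                 min_years: int = MIN_YEARS) -> float:
    """Score a candidate from 0 to 1 using only job-relevant fields.

    Demographic keys (gender, age, ...) may be present in the record,
    but this function never reads them.
    """
    have = {skill.lower() for skill in candidate.get("skills", [])}
    total = sum(job_skills.values())
    matched = sum(w for skill, w in job_skills.items() if skill in have)
    skill_part = matched / total if total else 0.0
    # Experience is a simple pass/fail check; the 80/20 split is an arbitrary choice.
    exp_part = 1.0 if candidate.get("years_experience", 0) >= min_years else 0.0
    return round(0.8 * skill_part + 0.2 * exp_part, 3)


a = {"skills": ["Python", "SQL"], "years_experience": 3, "gender": "female"}
b = {"skills": ["Python", "SQL"], "years_experience": 3, "gender": "male"}
print(skills_score(a), skills_score(b))  # identical scores
```

Leaving demographic fields out is only a first step: other inputs, such as postal codes, can still act as proxies for demographic traits, so a check like this does not by itself guarantee a fair outcome.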

AI also helps companies create job postings and personalize communication with candidates, generating content from skill profiles, keywords, and past postings. According to a study by McKinsey, one automotive company even uses AI avatars to give feedback to applicants. The same study found that AI’s biggest impact in HR is in talent acquisition and onboarding, which account for about 20% of its value.

3. Structured Interview Assessments

AI can standardize interview questions and scoring so that every candidate is judged against the same criteria. This reduces subjective judgments and ensures consistency. Research shows that structured interviews are 81% more effective at identifying the best candidates than unstructured ones.
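To make standardized scoring concrete, here is a minimal Python sketch that combines several interviewers’ ratings on a fixed set of questions into one weighted score, and refuses to score a candidate if any question was skipped. The question names, the weights, and the 1–5 rubric scale are assumptions chosen for illustration.

```python
from statistics import mean

# Hypothetical interview guide: every candidate is asked the same questions,
# and each question carries a fixed weight for the role.
QUESTIONS = {"problem_solving": 0.4, "teamwork": 0.3, "role_knowledge": 0.3}


def structured_score(ratings: dict[str, list[int]],
                     questions: dict[str, float] = QUESTIONS) -> float:
    """Combine interviewers' 1-5 rubric ratings into one weighted score.

    Raises ValueError if any question is unscored or a rating is out of
    range, so no candidate is judged on a partial set of criteria.
    """
    missing = set(questions) - set(ratings)
    if missing:
        raise ValueError(f"unscored questions: {sorted(missing)}")
    total = 0.0
    for question, weight in questions.items():
        scores = ratings[question]
        if not scores or any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"ratings for {question!r} must be between 1 and 5")
        total += weight * mean(scores)  # average across interviewers
    return round(total, 2)


ratings = {"problem_solving": [4, 5], "teamwork": [3, 3], "role_knowledge": [5, 4]}
print(structured_score(ratings))
```

Averaging across interviewers dampens any single rater’s leanings, and rejecting incomplete scorecards keeps every candidate on the same criteria.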

4. Bias Detection and Correction

AI can detect and flag bias in job descriptions, interviews, and candidate evaluations, allowing for real-time adjustments. In job descriptions, for example, AI tools can identify gendered or exclusionary words and replace them with neutral alternatives, helping to attract a broader and more diverse group of applicants.
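The sketch below shows the basic mechanics of flagging wording in a job description: scan the text for terms on a watch list and suggest neutral alternatives. The word list and the suggested replacements in `SUGGESTIONS` are hypothetical examples chosen for illustration; production tools typically rely on much larger, research-based lexicons.

```python
import re

# Illustrative, hypothetical watch list mapping potentially exclusionary
# terms to more neutral suggestions.
SUGGESTIONS = {
    "rockstar": "skilled professional",
    "ninja": "expert",
    "aggressive": "ambitious",
    "dominant": "leading",
    "young": "motivated",
    "manpower": "workforce",
}


def flag_terms(text: str,
               suggestions: dict[str, str] = SUGGESTIONS) -> list[tuple[str, str]]:
    """Return (term, suggestion) pairs for each flagged word, in text order."""
    found = []
    for match in re.finditer(r"[A-Za-z]+", text):
        word = match.group().lower()  # case-insensitive lookup
        if word in suggestions:
            found.append((match.group(), suggestions[word]))
    return found


posting = "We need an aggressive Rockstar developer to join our young team."
for term, alternative in flag_terms(posting):
    print(f"'{term}' -> consider '{alternative}'")
```

Keeping a human in the loop to accept or reject each suggestion is a reasonable design choice here, since a word list cannot judge context.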

5. Diverse Data Training

Training AI models on diverse datasets is essential to minimize the risk of replicating existing biases. AI is more likely to make inclusive recommendations if it learns from diverse demographic, geographical, and professional data. A report by Accenture suggests that AI trained on diverse data is 30% more likely to make fair and unbiased decisions.

6. Continuous Monitoring and Feedback

AI systems that monitor recruiting for bias can provide feedback that helps refine processes over time. For example, research found that organizations using AI to monitor and analyze their hiring practices reported a 30% improvement in the diversity of their hires. Continuous monitoring helps ensure that biases are identified and addressed, leading to a more inclusive hiring process.
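One common way to make this kind of monitoring concrete, offered here as an illustration rather than taken from the research above, is the “four-fifths rule” from the U.S. Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is less than 80% of the highest group’s rate is generally treated as a sign of possible adverse impact worth investigating. The sketch assumes per-group applicant and hire counts are available; the group labels and numbers are hypothetical.

```python
def adverse_impact(applicants: dict[str, int], hires: dict[str, int],
                   threshold: float = 0.8) -> dict[str, float]:
    """Flag groups whose selection rate falls below `threshold` times the
    highest group's selection rate (the four-fifths rule when threshold=0.8).

    Returns {group: impact_ratio} for flagged groups only.
    """
    rates = {g: hires.get(g, 0) / n for g, n in applicants.items() if n > 0}
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody hired yet, so there is no reference rate
    return {g: round(r / best, 3) for g, r in rates.items() if r / best < threshold}


# Hypothetical numbers for one hiring cycle.
applicants = {"group_a": 200, "group_b": 150}
hires = {"group_a": 40, "group_b": 18}
print(adverse_impact(applicants, hires))
```

Here group_b’s selection rate (12%) is 60% of group_a’s (20%), so it is flagged. Running the same check each hiring cycle turns monitoring into concrete, comparable feedback.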

7. Inclusive AI Design

Collaborating with diverse teams when developing AI tools is essential, because it ensures that the algorithms reflect multiple perspectives and reduces the risk of embedding biases. A study by MIT Media Lab found that AI systems developed with input from diverse teams are 25% more likely to produce fair outcomes.

Case Studies

Here are brief case studies of two companies that used AI to reduce bias in hiring, highlighting their approaches and outcomes.

Unilever

Unilever, a global consumer goods company, had to process a large volume of job applications and wanted to reduce bias in candidate selection. To address this, it introduced an AI-based recruitment process that included digital interviews and online tests.

Candidates played games designed to assess their intrinsic qualities, followed by AI-analyzed video interviews that evaluated facial expressions and speech patterns. This method streamlined recruitment and created a more diverse candidate pool. The AI tools assessed skills and potential rather than backgrounds, which made the process fairer.

Hilton Hotels

Hilton Hotels implemented AI-powered interview platforms to enhance its recruitment process. By focusing on the interpersonal skills vital to the hospitality industry, Hilton made faster and more objective hiring decisions. The AI tools helped reduce bias by relying on data-driven criteria for candidate selection.

However, Hilton recognized the value of a personal touch in recruitment, so it balanced technology with human interaction to ensure a good candidate experience.

Conclusion

Reducing bias in recruiting isn’t just about fairness. It’s about building a stronger, more diverse workforce that drives better results. By using AI tools, companies can create a more objective and inclusive hiring process.

Blind recruitment, structured interviews, and continuous monitoring help ensure that candidates are judged on their true abilities rather than on biased views. As businesses adopt these technologies, they can improve recruitment, attract more talent, and foster a diverse, inclusive culture.

Ready to make your hiring process more inclusive and unbiased? Learn how Talentino’s AI-driven recruitment tools can help you reduce bias and attract top talent. Sign up with us today and transform your recruitment strategy for a fairer, more diverse workforce!

FAQs

- How can AI reduce bias in recruitment?

AI can reduce bias by:

Anonymizing candidate details.

Standardizing interview processes.

Detecting biased language in job descriptions.

- How can we eliminate bias in AI?

To minimize bias in AI, we must:

Train models on diverse datasets.

Involve diverse teams in development.

Continuously monitor and adjust the AI for fairness.

- How do you remove bias from recruitment?

Remove bias by using blind recruitment, standardized interview questions, and AI tools that find and fix biases in hiring.

- How can AI improve recruitment?

AI improves recruitment by streamlining candidate screening, enhancing decision-making, and reducing time-to-hire. It can also increase diversity by minimizing bias.

- How does artificial intelligence help to mitigate bias in the hiring process?

AI helps mitigate bias by anonymizing candidate details, standardizing evaluations, and detecting biased language, making the recruitment process more objective and fair.

- What are the potential risks of bias in AI recruitment tools?

AI recruitment tools can perpetuate bias if trained on biased data, leading to unfair hiring practices. It’s crucial to ensure responsible AI development to minimize these risks.

- How can machine learning be used to reduce bias in hiring?

Machine learning can analyze large datasets to identify patterns of bias and make adjustments in the hiring process, ensuring more inclusive and effective recruitment.

- Can AI eliminate human bias in recruiting?

While AI can significantly reduce human bias, it cannot eliminate it. Continuous monitoring and diverse input are needed to tackle AI bias in hiring.

- What role do human recruiters play alongside AI in hiring?

Human recruiters provide the necessary judgment and understanding of soft skills and cultural fit, complementing AI’s objective recruitment capabilities to create a balanced hiring process.

- How can companies ensure AI software does not lead to biased hiring decisions?

Companies can ensure AI software is fair by training it on diverse datasets, regularly auditing its decisions, and involving diverse teams in AI development to avoid algorithmic bias.